Ángel Alexander Cabrera

Discovery of Intersectional Bias in Machine Learning Using Automatic Subgroup Generation

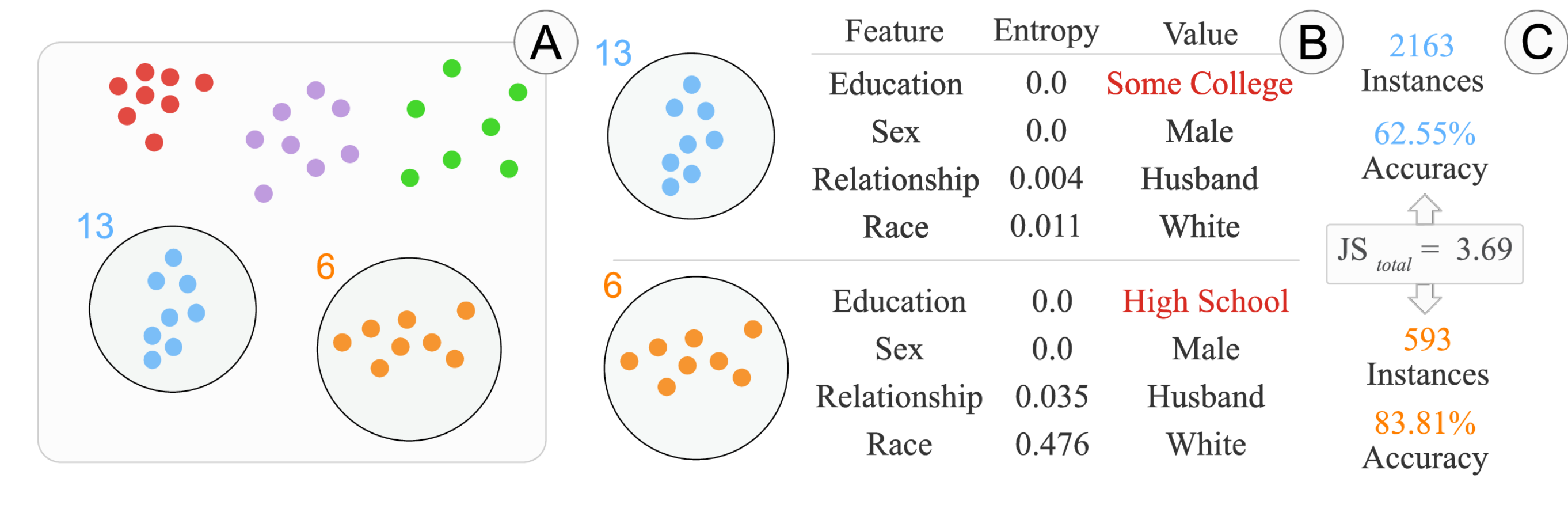

As machine learning is applied to data about people, it is crucial to understand how learned models treat different demographic groups. Many factors, including the training data and the class of model used, can encode biased behavior into learned outcomes. These biases are often small when considering a single feature (e.g., sex or race) in isolation, but appear more blatantly at the intersection of multiple features. We present our ongoing work on designing automatic techniques and interactive tools that help users discover subgroups of data instances on which a model underperforms. Using a bottom-up clustering technique for subgroup generation, users can quickly find areas of a dataset in which their models are encoding bias. Our work presents some of the first user-focused, interactive methods for discovering bias in machine learning models.
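The abstract describes generating subgroups bottom-up and flagging those on which a model underperforms. What follows is a minimal sketch under our own assumptions, not the authors' implementation: it uses average-linkage agglomerative clustering with Hamming distance over categorical features, measures performance as accuracy, and ranks each cluster by its accuracy gap from the full dataset. The function names (`agglomerate`, `feature_accuracy`, `rank_subgroups`) and the toy data are hypothetical and exist only for illustration.

```python
from collections import defaultdict
from itertools import combinations


def hamming(a, b):
    """Count the categorical features on which two instances differ."""
    return sum(x != y for x, y in zip(a, b))


def agglomerate(rows, n_clusters):
    """Hypothetical bottom-up (average-linkage) clustering: start from singleton
    clusters and repeatedly merge the closest pair until n_clusters remain.
    Roughly cubic time, so only suitable for small toy data."""
    clusters = [[i] for i in range(len(rows))]

    def dist(c1, c2):
        # Average pairwise Hamming distance between members of two clusters.
        total = sum(hamming(rows[i], rows[j]) for i in c1 for j in c2)
        return total / (len(c1) * len(c2))

    while len(clusters) > n_clusters:
        i, j = min(combinations(range(len(clusters)), 2),
                   key=lambda p: dist(clusters[p[0]], clusters[p[1]]))
        clusters[i] += clusters[j]  # i < j, so deleting j leaves index i valid
        del clusters[j]
    return clusters


def accuracy(idx, y_true, y_pred):
    """Fraction of the instances in idx that the model labels correctly."""
    idx = list(idx)
    return sum(y_true[k] == y_pred[k] for k in idx) / len(idx)


def feature_accuracy(rows, y_true, y_pred, col):
    """Accuracy for each value of a single feature (a one-feature slice)."""
    groups = defaultdict(list)
    for k, row in enumerate(rows):
        groups[row[col]].append(k)
    return {value: accuracy(idx, y_true, y_pred) for value, idx in groups.items()}


def rank_subgroups(rows, y_true, y_pred, n_clusters):
    """Score each generated subgroup by its accuracy gap from the whole dataset;
    the most negative gaps (worst-performing subgroups) come first."""
    overall = accuracy(range(len(rows)), y_true, y_pred)
    return sorted((accuracy(g, y_true, y_pred) - overall, sorted(g))
                  for g in agglomerate(rows, n_clusters))


if __name__ == "__main__":
    # Synthetic toy data: features are (sex, race); errors fall on two intersections.
    rows = [("F", "A")] * 2 + [("F", "B")] * 2 + [("M", "A")] * 2 + [("M", "B")] * 2
    y_true = [1] * 8
    y_pred = [1, 1, 0, 0, 0, 0, 1, 1]
    print(feature_accuracy(rows, y_true, y_pred, 0))  # {'F': 0.5, 'M': 0.5}
    print(feature_accuracy(rows, y_true, y_pred, 1))  # {'A': 0.5, 'B': 0.5}
    for gap, members in rank_subgroups(rows, y_true, y_pred, 4):
        print(gap, [rows[k] for k in members])
```

On this synthetic data, every single-feature slice scores 0.5 accuracy, the same as the overall rate, while the clustering isolates the (F, B) and (M, A) intersections at 0.0 accuracy. This mirrors the pattern the abstract describes, where bias is invisible per feature but obvious at intersections. For real datasets, a library implementation such as scikit-learn's `AgglomerativeClustering` would be a more practical choice than this naive pairwise loop.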

Citation

Discovery of Intersectional Bias in Machine Learning Using Automatic Subgroup Generation

Ángel Alexander Cabrera, Minsuk Kahng, Fred Hohman, Jamie Morgenstern, Duen Horng (Polo) Chau

ICLR - Debugging Machine Learning Models Workshop (Debug ML). New Orleans, Louisiana, USA, 2019.

BibTeX

@article{cabrera2019discovery,
  title={Discovery of Intersectional Bias in Machine Learning Using Automatic Subgroup Generation},
  author={Cabrera, Ángel Alexander and Kahng, Minsuk and Hohman, Fred and Morgenstern, Jamie and Chau, Duen Horng},
  journal={Debugging Machine Learning Models Workshop (Debug ML) at ICLR},
  year={2019}
}